This is the second (and last) part of my write-up on how 8088 Domination was achieved; the first part is here. I reuse some terminology introduced in the first part, so before we continue, it’s worth reviewing some key definitions to avoid confusion:

Delta: An area of bytes that needs to change in screen memory to update the displayed image

Slice: A delta where not all bytes are the same value

Run: A delta where all bytes are the same value

On to the nitty-gritty!

Breaking With Tradition

If you’ve coded video or animation systems in the past, you may have correctly identified what I’m doing as less of a video codec and more of an animation system. Animation systems from the 1980s such as Autodesk Animator or DeluxePaint Animation store and play back deltas by iterating through data that describe what areas of screen memory to change, using codes and chunk types for things like “skip N pixels forward, then change M pixels”, “fill entire screen to solid color N”, and so on. This reduces the size of the file, but requires some decision-making and branching while iterating through the data.

I initially did the same thing, and wrote a fast routine that would iterate through a list of deltas to replay, handling runs using the efficient REP STOSB sequence, and the rest with REP MOVSB. It looked something like this:

Delta format:

0-1: start offset

2: fill value (if run; unused if slice)

3: length in upper 7 bits and run/slice flag in LSB; if the flag is set, the delta is a run

4-N: data (if slice)

Decompressed via:

; Prior setup:

; DS:SI=source data

; ES = destination (screen RAM)

; DX = number of deltas to iterate through

; CH = 0 and direction flag clear (CLD), so CL alone sets the REP count

@loopit:

lodsw ;load offset

xchg di,ax ;get destination ready

lodsw ;load bit, length, value

shr ah,1 ;isolate LSB?

mov cl,ah ;move length into place

jc @run ;if so, it's a run

;runs are the exception; slices should fall through first

@slice:

rep movsb ;copy slice to screen

;okay to fall through here since cx=0, rep stosb will do nothing

@run:

rep stosb ;replay run to screen (AL already has value)

@continue:

dec dx

jnz @loopit

This is optimal 8088 code for this operation, but the idea has two problems. First is a minor annoyance; a byte is wasted storing a fill value even if we aren’t handling a run. But the real problem is that there are two branches (JC and JNZ) for every delta we iterate over in the list. Branches are costly on almost all CPUs, even those as old as the 8088. This was a huge concern for me, as the average new image in my test material was made up of roughly 600 deltas, most of them slices. Some quick math to illustrate why this concerned me:

# of cycles available to us to paint a frame: About 40,000

# of cycles taken up by one untaken (JC) and one taken (JNZ) branch: About 24

# of cycles used by branches to replay 600 deltas: 14,400 (36% of our total)

So, in a typical frame full of changes, more than a third of our available CPU time is wasted handling branches. In a system where we have the same time quantum as 8088 Corruption but are trying to change more data than it did, this was a big step in the wrong direction!
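The arithmetic above is easy to replay for other delta counts. The following is only a back-of-the-envelope sketch in Python; the function name `branch_overhead` is made up for illustration, and the constants are the approximate figures quoted above.

```python
CYCLES_PER_FRAME = 40_000      # approximate per-frame budget quoted above
BRANCH_CYCLES_PER_DELTA = 24   # one untaken JC plus one taken JNZ (approximate)
DELTAS_PER_FRAME = 600         # typical frame in the test material


def branch_overhead(deltas, per_delta=BRANCH_CYCLES_PER_DELTA,
                    budget=CYCLES_PER_FRAME):
    """Return (cycles spent on branches, fraction of the frame budget)."""
    cycles = deltas * per_delta
    return cycles, cycles / budget


cycles, fraction = branch_overhead(DELTAS_PER_FRAME)
print(f"{cycles} cycles, {fraction:.0%} of the frame budget")
```

Doubling the delta count doubles the overhead, which is why removing the per-delta branches matters more than shaving cycles elsewhere in the loop.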

I thought of a few ways to mitigate this cost, such as unrolling the loop, rearranging deltas so that slices and runs are grouped together, and so on. This went on for about an hour before inspiration struck: Why not eliminate the branches altogether?

And just how the hell do you do that? By generating code instead of data. Instead of having the encoder spit out a description of what changes need to be made each frame, we switch to generating x86 opcodes that, when executed, directly implement the changes themselves.

This is the same strategy used to accelerate sprite plotting on many architectures, but when I realized I’d be doing the same thing for the entire screen, I started laughing out loud. What a ludicrous idea! And yet, in practice, you can see that it works.

It’s A Compiler!

The basic structure of a video “code” frame looks like this:

Startup code: Sets ES to point to the screen and DS:SI to point somewhere below its own instruction stream to where the data stream starts

Instruction stream: Instructions that re-point DI to new screen memory destinations and then issue combinations of MOV, MOVSB, STOSB, REP MOVSB, or REP STOSB to change screen memory contents

Cleanup code: A single RETF instruction to return to the caller

Data stream: For (REP) MOVSB, data that gets moved to areas of screen memory

As long as the code is aligned to a DOS 16-byte paragraph boundary, it will execute properly, so the player code enforces alignment of the frame data to paragraph boundaries. Not doing so results in hilarity, as the correct screen memory locations will be changed properly, but with data from the wrong place.

(It is, of course, quite possible to rewrite a few values in the code to get it to execute properly wherever it is located, but I didn’t want to perform code fixups in real time at 60Hz; the system is already slow, let’s not make it any slower.)

Because the instruction stream adds size and processing time to the video data, it’s important for us to generate optimal code that is as fast as possible without being too large. For example, if you want to set a single byte in an arbitrary location pointed to by ES:, most x86 asm programmers would do it like this:

ES: MOV BYTE PTR [nnnn],val

This is fast, but counting the ES: segment-override prefix it is 6 bytes in size. If you have your value pointed to by DS:SI, you can also do it like this:

MOV DI,nnnn

MOVSB

This is 5 bytes (4 opcode, 1 data), one byte smaller, and slightly slower. However, because MOVSB advances DI automatically, it can save you from having to do the same thing manually. For a single byte that doesn’t buy much, but what if we have three bytes to change? We can continue to set them directly:

ES: MOV WORD PTR [nnnn],mmmm

ES: MOV BYTE PTR [nnnn],mm

…or do this instead:

MOV DI,nnnn

MOVSW

MOVSB

The latter method is much smaller and slightly faster. (This can go on for a while, but eventually there is a break-even point where switching to REP MOVSB is faster than all other encodings.)
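To make the trade-off concrete, here is a small Python sketch that emits the raw 8088 opcode bytes for two ways of replaying a slice and compares them. It is an illustration, not the actual generator: the function names are invented, only two candidate encodings are considered, and it ranks candidates by total size as a stand-in for the cycle-based ranking described in the next paragraphs.

```python
import struct


def mov_di(offset):
    """MOV DI,imm16: opcode 0xBF followed by a little-endian word."""
    return b"\xBF" + struct.pack("<H", offset)


def encode_slice_unrolled(offset, data):
    """MOV DI, then one MOVSW (0xA5) per word plus a MOVSB (0xA4) if odd."""
    words, odd = divmod(len(data), 2)
    code = mov_di(offset) + b"\xA5" * words + b"\xA4" * odd
    return code, bytes(data)


def encode_slice_rep(offset, data):
    """MOV DI, MOV CX,imm16 (0xB9), then REP MOVSB (0xF3 0xA4)."""
    code = mov_di(offset) + b"\xB9" + struct.pack("<H", len(data)) + b"\xF3\xA4"
    return code, bytes(data)


def smallest_encoding(offset, data):
    """Pick the candidate with the fewest code + data bytes (size as a proxy)."""
    candidates = [encode_slice_unrolled(offset, data),
                  encode_slice_rep(offset, data)]
    return min(candidates, key=lambda cd: len(cd[0]) + len(cd[1]))


code, payload = encode_slice_unrolled(0x1234, b"\x11\x22\x33")
print(code.hex(), payload.hex())   # bf3412a5a4 112233
```

With these two candidates the unrolled form stays smaller up to 8 bytes of slice data, ties at 9 and 10, and loses to REP MOVSB beyond that; a cycle-based comparison could put the break-even point somewhere else.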

Although I had worked out most optimal combinations for various slice and run durations, in the end I felt it was better to just have the compiler generate every code variation, calculate how many cycles each one took to execute, and pick the fastest one. (I figured it was safer and more future-proof than me trying to hand-optimize generator output.) Calculating cycle counts for the 8088 is almost as easy as it is for 8-bit CPUs; the 8088 has only one core, no cache, no threads, no pipelines, no out-of-order execution… it does have a prefetch queue, but it is only 4 bytes long so it isn’t very effective. The major factor in optimizing 8088 code for speed is minimizing memory accesses, because the CPU takes 4 cycles to read (or write) a byte — any byte, even instruction stream opcodes. So, in most cases, the smallest code usually wins. The only exceptions to this rule are instructions that take an extremely long time, such as MUL/DIV, which can run for over 100 cycles depending on the operands.

Andrew Jenner, a good friend and a better coder than I am, has an excellent rule of thumb for determining 8088 code execution speed: Multiply the size of the opcode and the size of any data touched by that opcode by 4 for an informal cycle count; then, also determine the sum of each instruction’s published cycle count. Whichever number is larger is the more accurate execution time estimate.
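That rule of thumb fits in a few lines of Python. This sketch assumes the rule is applied to a whole instruction sequence at once (it could also be read as per-instruction), and the cycle counts in the example are illustrative figures that should be checked against a published timing table before being relied on.

```python
def estimate_cycles(instructions):
    """Estimate 8088 execution time for a sequence of instructions.

    instructions: iterable of (opcode_bytes, data_bytes_touched, published_cycles).
    Returns the larger of the bus-bound estimate (4 cycles per byte fetched,
    read, or written) and the sum of the published cycle counts.
    """
    instructions = list(instructions)
    bus_estimate = 4 * sum(op + data for op, data, _ in instructions)
    published = sum(cycles for _, _, cycles in instructions)
    return max(bus_estimate, published)


# Illustrative entries (assumed timings):
#   MOV DI,nnnn : 3 opcode bytes, no memory data, 4 published cycles
#   MOVSB       : 1 opcode byte, 1 byte read + 1 byte written, 18 published cycles
sequence = [(3, 0, 4), (1, 2, 18)]
print(estimate_cycles(sequence))   # 24: the bus-bound estimate wins over 22
```

For long-running instructions such as MUL or DIV the published count dominates instead, which matches the exception noted earlier.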

I won’t go over the code generator itself in this write-up because it is very mundane and not terribly exciting; refer to the source code when I release it in a few weeks.

Delta Optimizations

Once I had an idea of the code generation cost, I came up with a couple of ways to reduce that cost by manipulating the delta list before it was processed by the encoder. Less work for the compiler to do meant smaller code/data and faster execution. Delta optimization consists of four phases:

- Run Identification and Splitting. Because runs process faster and encode much smaller than slices, it is a huge win to identify any runs hiding inside of slices and split them out into their own delta. This phase also marks any runs it finds as excluded from further processing (“frozen”), as runs are already optimal.

- Pixel “Shaving”. Changing only a single byte in screen memory has a very high cost (5 bytes of code and data, plus the time they take to execute) so pixel “shaving” looks at each single-byte delta to determine how many pixels are actually changed by the byte. If a particular threshold is not met (i.e. “more than one pixel”), the delta is dropped completely. This is a user-configurable option and is off by default.

- Delta “Shaving”. Identical to pixel shaving, except entire deltas are dropped if they aren’t large enough. The default threshold is “more than two bytes”; anything smaller is dropped. This is also user-configurable, and also off by default.

- Delta Combination. This phase looks for deltas that are spatially close to each other in linear memory and combines them together if the end result would encode as fewer bytes. For example, assume we have three 1-byte deltas all spaced one byte apart. Also assume that replaying these three deltas costs 5 bytes each, for a total of 15. Now consider what happens if we combine all three deltas into a single delta spanning the three changed bytes: The number of bytes changed onscreen will grow by 2, but we shed 10 bytes because we only have one delta to set up and replay. It is a net win, so it is always worth it to try to find combination opportunities. (This is technically an NP-hard problem, and implementing it quickly and stupidly as an exhaustive search greatly slowed down the code. I optimized it by re-sorting the deltas by start offset, so that the search space is localized around the delta(s) being examined. After all the combinations are found, the deltas are re-sorted back into the order that assists the encoding phase, as described earlier in part 1.)
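The combination phase can be sketched as a greedy pass over deltas sorted by start offset. This is a simplification, not the search the encoder actually performs: the cost model (a fixed per-delta overhead plus one byte per byte covered), the default `overhead=4`, and the function name are all assumptions made for illustration, and the deltas are assumed not to overlap.

```python
def combine_deltas(deltas, overhead=4):
    """Greedily merge neighbouring deltas when the merged form is cheaper.

    deltas: list of non-overlapping (start, length) pairs.
    Assumed cost of one delta: overhead + length bytes.
    """
    def cost(length):
        return overhead + length

    merged = []
    for start, length in sorted(deltas):
        if merged:
            prev_start, prev_len = merged[-1]
            span = start + length - prev_start   # bytes covered if merged
            if cost(span) < cost(prev_len) + cost(length):
                merged[-1] = (prev_start, span)  # absorb the gap bytes too
                continue
        merged.append((start, length))
    return merged


print(combine_deltas([(200, 4), (100, 3), (104, 2)]))
# [(100, 6), (200, 4)]
```

Under this cost model two deltas merge whenever the gap between them is smaller than the per-delta overhead; the merged delta then has to carry the unchanged gap bytes as ordinary data.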

All of these phases reduce size and execution cost. The pixel shaving and delta shaving phases have the added benefit of cleaning up the source video a little; if a pixel is “shimmering” over time due to being right at the error threshold of the dithering process, it will be “stable” with pixel or delta shaving turned on. The drawback to the shaving phases, however, is that the output can leave “trails” onscreen as smaller changes are never fully cleaned up or overwritten. Use with caution.
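To make the first phase concrete, here is a minimal Python sketch of run identification and splitting. The tuple layout, the function name, and the `min_run` threshold are assumptions for illustration; the threshold the real encoder uses is not stated here.

```python
def split_runs(start, data, min_run=4):
    """Split one slice into slices and runs.

    Returns a list of ("slice", offset, bytes) and ("run", offset, length, value)
    tuples. A stretch of at least `min_run` identical bytes becomes a run.
    """
    out = []
    pending = 0              # index where the not-yet-emitted slice begins
    i, n = 0, len(data)
    while i < n:
        j = i
        while j < n and data[j] == data[i]:
            j += 1           # extend over identical bytes
        if j - i >= min_run:
            if i > pending:  # flush the slice that precedes the run
                out.append(("slice", start + pending, bytes(data[pending:i])))
            out.append(("run", start + i, j - i, data[i]))
            pending = j
        i = j
    if pending < n:          # trailing slice, if any
        out.append(("slice", start + pending, bytes(data[pending:])))
    return out


print(split_runs(10, bytes([1, 2, 0, 0, 0, 0, 0, 3])))
# [('slice', 10, b'\x01\x02'), ('run', 12, 5, 0), ('slice', 17, b'\x03')]
```

Choosing `min_run` is a trade-off: splitting out a short run adds the setup cost of up to two extra deltas, so very short runs are better left inside the slice.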

(While not benefiting optimization, there is also a prep phase that executes before the other phases and performs oversize delta splitting, which takes deltas that are too large to execute within our available cycle pool and breaks them up into smaller deltas. This is always necessary when the entire screen changes, as this creates a delta list that consists of only one single delta with a start offset of zero and an end offset at the edge of screen memory. A delta that big is way over both the available byte and cycle limits, so it has to be split into smaller chunks to be replayed over multiple passes.)
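Oversize splitting itself is simple chunking. The sketch below is illustrative only: `max_len` stands in for whatever limit the byte and cycle budgets impose, and the numbers in the example are hypothetical.

```python
def split_oversize(start, length, max_len):
    """Break one (start, length) delta into consecutive chunks of at most max_len bytes."""
    if max_len <= 0:
        raise ValueError("max_len must be positive")
    chunks = []
    while length > 0:
        take = min(length, max_len)
        chunks.append((start, take))
        start += take
        length -= take
    return chunks


# A hypothetical full-screen delta split against a hypothetical 6000-byte limit.
print(split_oversize(0, 16000, 6000))
# [(0, 6000), (6000, 6000), (12000, 4000)]
```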

Playing With Variable Packets

The player for the 8088 Domination FMV data is very similar to 8088 Corruption: By controlling the size of the audio data the soundcard interrupt handles, we can get the interrupt to fire at our desired video framerate and use the opportunity to update the video as well. The interrupt handler pulls data out of a queue and updates the screen at the same time it updates the audio. While the interrupt is firing in the background, a foreground loop is constantly reading from disk and putting data into a queue. I cover this in more detail in 8088 Corruption Explained, so if you have a half hour to kill, I highly recommend snagging the MPEG-2 file (best quality) and watching it.

Where the players differ, however, is in two areas:

- Instead of moving video data to screen RAM, the Domination player CALLs the video frame code, which executes and then returns

- The read-and-fill-memory loop, as well as the interrupt handler pointer management code, deals with variably-sized video+audio packets; this is because the output of the encoder varies in size based on how many changes are present from frame to frame

Two changes were made to the muxed video+audio stream for Domination that not only enabled handling variably-sized packets, but also sped up disk reads. The first change was to align each packet of video+audio data to disk sector boundaries, which sped up disk reads due to the way DOS handles buffering: DOS will normally transfer disk requests into its own internal buffers (if you’ve ever wondered what the BUFFERS= line in CONFIG.SYS was for, now you know) and then copy to the caller’s buffer. However, if the caller requests reading a sector-aligned offset (and amount) into a normalized paragraph-aligned pointer, DOS is smart enough to instruct the BIOS to transfer the data directly to the caller’s buffer. This made disk reads return a little quicker, as DOS’s usual double-buffering step was avoided.

The second change to the player was to keep track of how large each video+audio packet was. Rather than put size headers before each chunk, or scan the entire file before starting to determine sizes, I chose to write an index to the end of the file stream. The index consists of one byte per video+audio packet, where each byte indicates the size of the packet in sectors; this is possible because each packet is guaranteed to be aligned to sectors. (This limits the size of each packet to (255*512) = 127.5KB, but we will never see a single frame that large in practice; in fact, we will never see a packet larger than 64KB because that is the 16-bit real-mode segment size limit.)
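As a rough model of that layout, the Python sketch below pads each packet to a sector boundary, appends a one-byte-per-packet index, and walks the index to recover offsets. The function names are invented, zero-byte padding is an assumption, and how the player locates the start of the index (for example, a packet count stored elsewhere) is not covered.

```python
SECTOR = 512


def pad_to_sector(packet):
    """Pad a packet with zero bytes up to the next sector boundary."""
    return packet + bytes(-len(packet) % SECTOR)


def build_stream(packets):
    """Return the sector-aligned packets followed by the size index."""
    padded = [pad_to_sector(p) for p in packets]
    # One byte per packet; bytes() raises ValueError above 255 sectors.
    index = bytes(len(p) // SECTOR for p in padded)
    return b"".join(padded) + index


def packet_offsets(index):
    """Yield (byte_offset, byte_length) for each packet described by the index."""
    offset = 0
    for sectors in index:
        yield offset, sectors * SECTOR
        offset += sectors * SECTOR


stream = build_stream([b"a" * 700, b"b" * 512, b"c"])
index = stream[-3:]
print(list(index), list(packet_offsets(index)))
# [2, 1, 1] [(0, 1024), (1024, 512), (1536, 512)]
```

Because every offset is a running sum of sector counts, each read the player issues starts on a sector boundary, which is what lets DOS skip its internal buffers as described above.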

To my surprise, the part of the player I spent the most time enhancing for the Domination format was the memory handling. The original player used a circular buffer (a FIFO queue) that fed disk data into the “head” while the interrupt handler grabbed data from the “tail”. Typical circular buffers are divided into fixed-size blocks, but I had just switched to variably-sized packets. I needed a FIFO that could:

- Accept variably-sized allocations/deallocations

- Manage a linear area of memory with wraparound without requiring that area to be a power-of-two length

- Be interrupt-safe (i.e. no locks/mutexes/semaphores required to put data in or pull data out)

- Always return paragraph-aligned normalized pointers

- Perform all of the above while dealing with 16-bit real-mode segment limitations

In the end, I wrote code that was not so much a typical circular buffer, but more of a heap manager where the caller “reserves” an amount of memory, receives a pointer to an area they can use, fills it with data, and then “commits” the area. Prior commits can be retrieved in FIFO order using another function. The design is interrupt-safe because reserves and commits don’t touch the data structures that are used by retrievals, and vice versa. I know it sounds stupid to be proud of a data structure, but I was pretty pleased with myself when I finished implementing it. (I’ve since learned there is a similar construct out there called a bip buffer, but a bip buffer wastes more memory and time than what I came up with.)
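The reserve/commit/retrieve interface can be modelled in a few lines of Python. This is a single-threaded bookkeeping model written from the description above, not the player's actual data structure: the class and method names are invented, it works with byte offsets rather than segment:offset pointers, and it does not reproduce the separation of producer and consumer state that makes the real thing interrupt-safe.

```python
from collections import deque

PARAGRAPH = 16  # bytes per real-mode paragraph


class ReserveCommitFifo:
    def __init__(self, capacity):
        self.capacity = capacity - capacity % PARAGRAPH
        self.memory = bytearray(self.capacity)
        self.head = 0          # offset where the next reservation would start
        self.blocks = deque()  # committed (offset, size) pairs, oldest first
        self.reserved = None   # outstanding reservation, if any

    def reserve(self, size):
        """Return a paragraph-aligned offset with room for `size` bytes, or None."""
        size = -(-size // PARAGRAPH) * PARAGRAPH  # round up to whole paragraphs
        if size <= 0 or size > self.capacity:
            return None
        if not self.blocks:
            start = 0                             # empty: restart at the bottom
        else:
            tail = self.blocks[0][0]
            if self.head > tail:                  # data is one contiguous span
                if size <= self.capacity - self.head:
                    start = self.head
                elif size <= tail:
                    start = 0                     # wrap, leaving the top unused
                else:
                    return None
            elif size <= tail - self.head:        # data already wraps around
                start = self.head
            else:
                return None
        self.reserved = (start, size)
        return start

    def commit(self):
        """Make the reserved block visible to retrieve()."""
        start, size = self.reserved
        self.blocks.append((start, size))
        self.head = start + size
        self.reserved = None

    def retrieve(self):
        """Pop the oldest committed (offset, size), or None if empty."""
        return self.blocks.popleft() if self.blocks else None
```

A caller would `reserve()` space for the next packet, read from disk into `memory[offset:offset + size]`, then `commit()`; the consumer calls `retrieve()` once per frame. A block never straddles the end of the buffer, so each packet stays contiguous at the cost of some unused space at the top when a reservation wraps.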

In Search Of Test Material

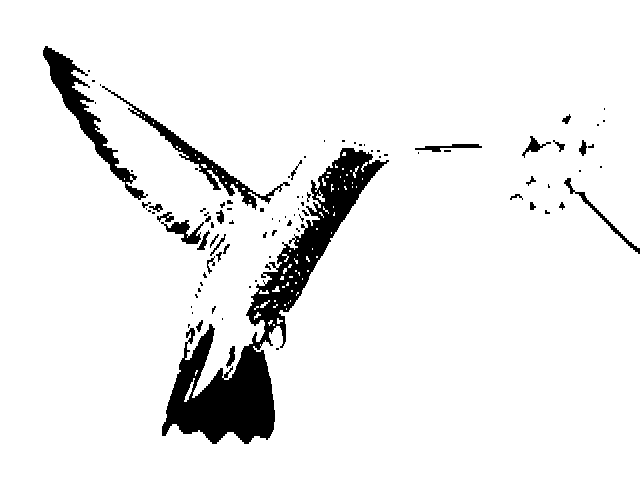

With two fully-functioning video modes supported by the encoder, I now had to choose some test material to show it off. For the color portion, I decided to use exactly the same footage that I’d used 10 years earlier with Corruption, so that people could directly compare them and see for themselves that the newer method was better overall. For the B&W portion, I had difficulty picking material; I was about to go with one of the old Apple silhouette-style iPod/iTunes commercials until I saw a Game Sack episode where a homebrew Genesis cart was shown playing the Bad Apple animation. I was hooked; it was perfect test material. High-contrast shadow puppetry lent itself very well to my “animation compiler” because, most of the time, very little is actually changing per frame, and what is changing has very clean residuals.

Finding a clean source of the Bad Apple animation proved more difficult than I thought it would be. Every YouTube version had resizing or transcoding artifacts, so after some research I found the original Japanese video-sharing site it originated from and grabbed it from there, which resulted in an extremely clean 30fps source to work with.

Conclusion

8088 Domination may be my best work; I’m very proud of the result. I had to think creatively and unconventionally to solve problems. If people are considered artists based on the uniqueness and viewpoint of their output — paintings, novels, musical works — then I’d like to think programmers can be artists too, and judged by the same conventions.

I want to fix a few bugs in the source and tidy it up, and once I’ve done that I will release the source, executables, and documentation so that you can create your own videos using the system. Until then, enjoy a video of the competition I showed it at, complete with audience reaction:
