2026 marks 30 years since I first became directly involved in computer/video game archival from a preservation and outreach standpoint. From the early days building up the abandonware movement, to co-founding MobyGames, to continued involvement with the Total DOS Collection, to being a vocal advocate of cycle-accurate emulation and archival best practices… I’d like to think I’ve helped a little bit. There were times when I unsuccessfully tried to make software archival a career, only to have Real Life(tm) step in and force me away from it. Still, I’ve tried.

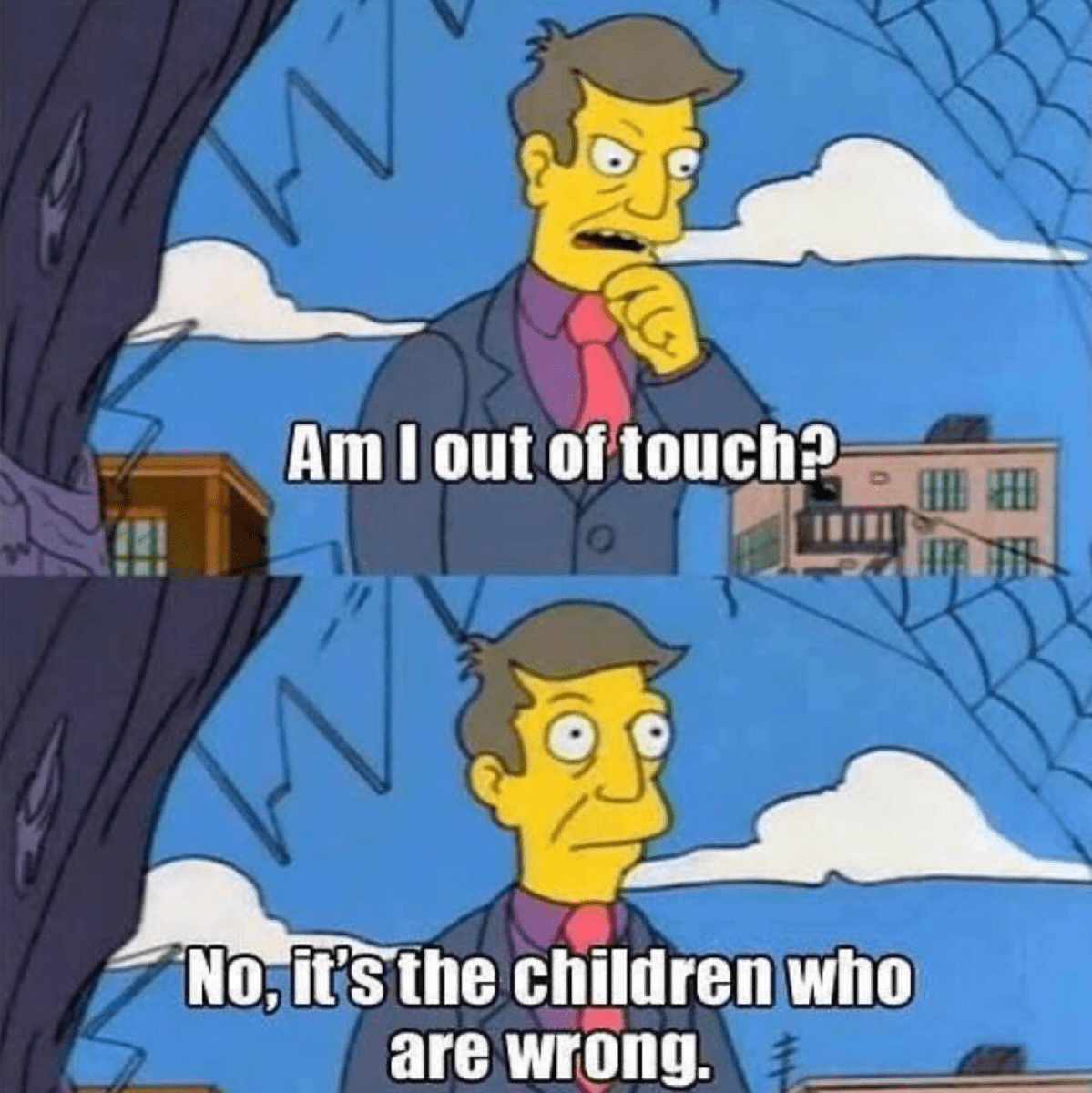

Once in a while, I feel the need to comment on software archival; something punches my imposter syndrome unconscious, and I gain the confidence to speak up and get involved again. But instead of being Old Guy Shakes Fist At Cloud this time, I decided to step back and get a feel for how things are in this space before getting involved. Am I still doing it right? Would I make a fool of myself recommending a practice that has since been debunked, a person who has since retired, or an organization that is sunsetting? I decided to get caught up by taking a crash course on what the Video Game History Foundation is doing, and since I can listen to podcasts easily during my commute, I decided to listen to their podcast, the Video Game History Hour.

When I say “I decided to listen to it”, I mean all of it. All 150 episodes, plus the bonus episodes (they have since continued with Episode 151). From the introduction of Kelsey, to the departure of Kelsey; from Frank as a host, to Phil as a host; from covering videogame history, to the more inward meta activity of “covering videogame history” itself. I’ve enjoyed consuming it over the past six months of listening, despite overwhelming FOMO sadness on my part.

I took notes. A lot of notes. And organized them into recurring themes. While my goal was to extract what the current state of best practices is, I learned more about the institutional side’s trials and tribulations than I had previously assumed. While I didn’t always agree with some of the viewpoints expressed, I’m grateful for the exposure.

What follows is my “best practices executive summary” of the first 150 episodes of The Video Game History Hour. This was originally meant to be a cheat sheet for just me, but — inspired by Ep. 102: Preservation: How Do I Start? — I thought it would be worth sharing as a primer for anyone else who would like to start learning about the crazy world of saving gaming history. I sincerely hope this is helpful to someone.

Everything that follows this sentence is licensed under a Creative Commons Attribution 4.0 International License (CC BY 4.0). I will make no further edits, but encourage anyone to copy, redistribute, modify, remix, transform, or build upon it as they see fit.

Comprehensive Best Practices and Procedures for Video Game History and Preservation (as gleaned from The Video Game History Hour)

Digital Archiving and Data Recovery

Technical Standards and Verification

- Bit-Level Imaging: Prioritize bit-level disk imaging for all fragile media (floppies, CD-Rs, magnetic tape) using professional tools like KryoFlux, Greaseweazle, Applesauce, etc. For laserdisc or analog videotape, aim to use a Domesday Duplicator, although an uncompressed 4:2:2 YUV capture is acceptable.

- Bit-Accurate Verification: Confirm “good dumps” by comparing results across multiple hardware/software combinations. Use checksums (MD5/SHA-1) to ensure the digital image perfectly matches the original state, and/or other known good images.

- Standardize Version Control: Explicitly document ROM revisions, regional variants, and “staged” localizations. Acknowledge that “Version 1.0” code is often an incomplete record of a game’s lived history.

- Forensic PCB Documentation: Include high-resolution photography of circuit boards and handwritten labels. Labels provide essential provenance (e.g., “Lot Check,” “E3 Build”) that is missing from the binary.
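
The cross-checking described under Bit-Accurate Verification can be sketched in a few lines of Python. This is only an illustration, assuming each dumping attempt produced its own decoded image file; the names `hash_image` and `dumps_agree` are made up for this sketch and do not come from any of the tools named above.

```python
import hashlib


def hash_image(path, chunk_size=1 << 20):
    """Return (md5, sha1) hex digests for one image file, read in chunks."""
    md5 = hashlib.md5()
    sha1 = hashlib.sha1()
    with open(path, "rb") as handle:
        # Read in 1 MiB chunks so large disc images do not need to fit in RAM.
        for chunk in iter(lambda: handle.read(chunk_size), b""):
            md5.update(chunk)
            sha1.update(chunk)
    return md5.hexdigest(), sha1.hexdigest()


def dumps_agree(paths):
    """True only if every file in `paths` has the same MD5 and SHA-1."""
    digests = {hash_image(p) for p in paths}
    return len(digests) == 1
```

Calling `dumps_agree(["drive_a.img", "drive_b.img"])` (hypothetical file names) on two reads of the same disk made with different hardware gives a quick yes/no answer. Raw flux captures generally differ from read to read, so a comparison like this only makes sense for decoded sector images.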

Source Code and Development Data

- Preserve the Full Repository: A source file is an artifact, but a “buildable repository” — compilers, libraries, asset pipelines — is a research resource. Document the original toolchain and build environment.

- Code Comments as Oral History: Treat internal comments as primary historical documents, as they can reveal the “why” behind technical trade-offs, and provide context for “bugs turned into features.”

- Prioritize Internal Storage: Image internal hard drives of development kits (Xbox, PS2, PC) before they are wiped. These often contain unrecorded builds and deleted workspace files not found on retail discs.

- Document Proprietary Engines: Preserve manuals and documentation for in-house engines to understand the technical factors that shaped a studio’s creative output.

Physical Preservation and Collection Management

Professional Stewardship

- Post-Custodial Archiving: Offer on-site digitization for donors who wish to retain physical heirlooms. This respects the owner’s attachment, while ensuring historical data is preserved and accessible.

- Systematic Categorization: Use clearly labeled, project-specific containers. Categorizing archives by studio, project, and media type (e.g., “Acclaim Projects,” “Simpsons Art”, etc.) significantly increases processing efficiency.

- Try to maintain vintage hardware: Keep a separate set of vintage hardware for facilitated public play and exhibits. This not only satisfies the public’s desire for a tactile experience, but also properly represents the original experience on period hardware. Use verified copies of original materials to avoid risking the integrity of permanent collections.

- Calculated Restoration: Weigh the labor cost of internal repairs against replacement. For common hardware, purchasing a replacement from the secondary market is often more fiscally responsible than in-house restoration.

Print and Ephemera Archiving

- Analog-to-Digital Standards: Scan magazines and paper files at high resolution (600-1200 DPI) in uncompressed formats (TIFF). Use Optical Character Recognition (OCR) to ensure all text is machine-searchable. Retain the original TIFF files, as future OCR and de-screening methods might produce better results than current ones.

- Preserve Production Assets: Archive the “paste-ups,” layout link files, and original photographic negatives. These provide higher fidelity than the final printed page.

- Document Licensing Bibles: Collect merchandising guides, “style bibles,” and trade catalogs. These reveal the economic strategies and brand management that software alone does not.
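
Retaining uncompressed TIFF masters has a storage cost worth planning for. Here is a rough estimate in Python, assuming a US-letter-sized page (8.5 x 11 inches) scanned in 24-bit color; the page size, bit depth, and the function name `uncompressed_scan_bytes` are my assumptions for illustration, and TIFF header overhead is ignored.

```python
def uncompressed_scan_bytes(width_in, height_in, dpi, bits_per_pixel=24):
    """Approximate raw pixel data size of one scanned page, in bytes."""
    width_px = round(width_in * dpi)
    height_px = round(height_in * dpi)
    return width_px * height_px * bits_per_pixel // 8


for dpi in (600, 1200):
    size = uncompressed_scan_bytes(8.5, 11, dpi)
    print(f"{dpi} DPI: {size / 1024**2:.0f} MiB per page")
```

Under those assumptions a single page is roughly 96 MiB at 600 DPI and roughly 385 MiB at 1200 DPI, so doubling the resolution quadruples the storage needed per page.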

Research, Journalism, and Oral History

Methodology and Sourcing

- Archive multiple sources to paint a broader picture: Cross-reference oral accounts with contemporary primary sources (trade ads, magazine previews, financial reports) to correct for “memory drift” and, unfortunately, retrospective embellishment.

- Interview more than the lead designer: Interview the “invisible” labor force — junior scripters, QA staff, localizers, and support engineers. These roles often hold more accurate “procedural memory” of technical friction.

- Identify Alternate Records: For marginalized histories (e.g., “feminine” games, identity-driven games, LGBTQ+ themes/creators/mechanics, etc.), look beyond gaming press. Traditional press, zines, college materials, lifestyle magazines, fashion journals, local business registrations, and others can sometimes provide better representation.

- Utilize Academic and Peer Networks: Collaborate with domain-specific experts (e.g., Japanese culture historians, linguistics experts) to ensure nuances of language and “kawaii” trends are accurately recorded.

Interview Techniques

- Technical Prompting: Use specific technical questions about mechanics (e.g., “How did you handle the limited sprite hardware?”) to trigger deep procedural memory that general questions miss. Consulting with a domain-specific expert can help, or better yet, have the expert perform the interview.

- Acknowledge “Memory Silence”: Respect when key figures refuse to speak due to trauma or NDAs. Don’t push. If something becomes lost to history, document their silence as one aspect of how the industry can have cultures of secrecy.

- Interview with visual aids: Use original design sketches or research papers as visual aids during interviews to help veterans reconstruct the development timeline.

- Nudge, but don’t correct: It is natural for humans to forget or misremember details from 30+ years ago. Try to ask clarifying follow-up questions that don’t outright accuse the interviewee of lying or misrepresenting history.

Organizational Management and Sustainability

Institutional Ethics

- Combat “Vocational Awe”: Maintain firm boundaries between the mission and the self. Recognize that “saving history” is professional labor that requires fair compensation and personal well-being to be sustainable. (Something I learned the hard way…)

- Maintain Developer Trust: Act as a “safe harbor” for sensitive material. Establish “Dark Archives” with clear time embargoes (e.g., 5-30 years) to ensure survival while respecting proprietary concerns.

- Transparent Mission Scoping: Clearly define your institution’s role (e.g., Research Library vs. Playable Museum vs. Staging Area, etc.). Focus on addressing “information voids” rather than duplicating existing efforts. Look to other organizations’ “bounties”, such as The Video Game History Foundation’s “call for missing items”, MobyGames’ “most wanted”, Redump/No-Intro/TOSEC missing hashes, etc.

Operational Sustainability

- Professional Resilience: Build multi-channel engagement (email, Patreon, YouTube, multiple social media sites) to avoid over-reliance on a single social media platform for fundraising or communication.

- The “Monthly Membership” Model: Prioritize recurring revenue over one-off donations. A predictable financial “floor” allows for better long-term project planning and scoping.

- Institutional Redundancy: Ensure all institutional knowledge (location of files, donor contacts) exists in searchable metadata, rather than “tribal knowledge” stuck in the heads of individual founders.

Game Design and Development History

Documenting Intent and Pacing

- Archive the Paper Trail: Collect draft scripts, “branching matrix” flowcharts, research notes, and even comments in source code. These reveal the functional relationship between storytelling and design that the final binary code obscures.

- Physics-First Iteration: Document the “kinetics” of a game — the feel of jumping, flying, or acceleration. Modern research should verify that these “foundational fun” mechanics were perfected before final art was applied.

- Value the Mistakes: Treat poorly-designed or commercially failed games as primary evidence. “Evolutionary dead ends” provide a more complete map of the industry’s maturation than a simple “best-of” list.

Contextual and Online History

- Document Live Service Life Cycles: Archive patches, changelogs, and high-quality video of seasonal/ephemeral events. Documenting the “social surround” (Discord logs, Twitch streams) is essential for games that only existed in a connected state.

- Preserve Regional Realities: Branch out from Western experiences (e.g., distinguish between intended speeds (60Hz) and regional realities (50Hz PAL)). Documenting the “stuttered” experience of European players contributes to accurate social history.
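
To put a number on the 50Hz/60Hz gap: the sketch below assumes a game whose logic advances one step per displayed frame and that was not adjusted for 50Hz displays. It uses the nominal 60 and 50 figures rather than exact broadcast rates, and the function name `pal_slowdown` is mine, for illustration only.

```python
def pal_slowdown(intended_hz=60.0, actual_hz=50.0):
    """Return (speed_ratio, percent_slower) for frame-locked game logic."""
    ratio = actual_hz / intended_hz
    return ratio, (1.0 - ratio) * 100.0


ratio, slower = pal_slowdown()
print(f"Runs at {ratio:.1%} of intended speed ({slower:.1f}% slower)")
# Time needed at 50Hz to play through 180 seconds of intended gameplay.
print(f"180 s of intended gameplay takes {180 / ratio:.0f} s")
```

In other words, an unadjusted conversion runs at about five-sixths of its intended speed. That difference is large enough to be worth recording next to any regional variant in a collection.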

Legal Advocacy and Public Policy

- Evidence-Based Advocacy: Conduct rigorous research (like the 13% availability study) to demonstrate “market failure” when petitioning for copyright exemptions.

- Differentiate Access from Piracy: Clearly distinguish between institutional access (authenticated research) and commercial piracy in all legal filings, to build institutional credibility. (Ask me how I know!)

- Join Collective Networks: Collaborate to share legal resources and participate in collective bargaining for better preservation laws.

- Standardize Obsolescence Metrics: Push for clear, technical, measurable criteria for when a game, platform, or hardware ecosystem should legally count as “obsolete” for the purposes of preservation, access, and copyright exemptions. (This matters because of DMCA §1201, under which the mere existence of niche manufacturers, or of a game client whose servers are long dead, can be mistaken for “it’s still available.”)
